Detailing the evolution of our reporting system to make it better and easier for you on PlayStation

Following on from the introduction of ‘Play Time’ in our recent 5.5 System Software Update – and as the first in a series of weekly articles – we’re going to start looking at the ways we can help you stay safe on the PlayStation Network.

Specifically, we’re going to focus on how the reporting mechanisms have developed, allowing you to tell us, in more detail than ever, about the user-generated content you don’t want to see on PSN.

How our reporting practices changed back in 2016

In 2016, our Safety and Moderation teams undertook a wholesale review of the report options available to players on PSN.

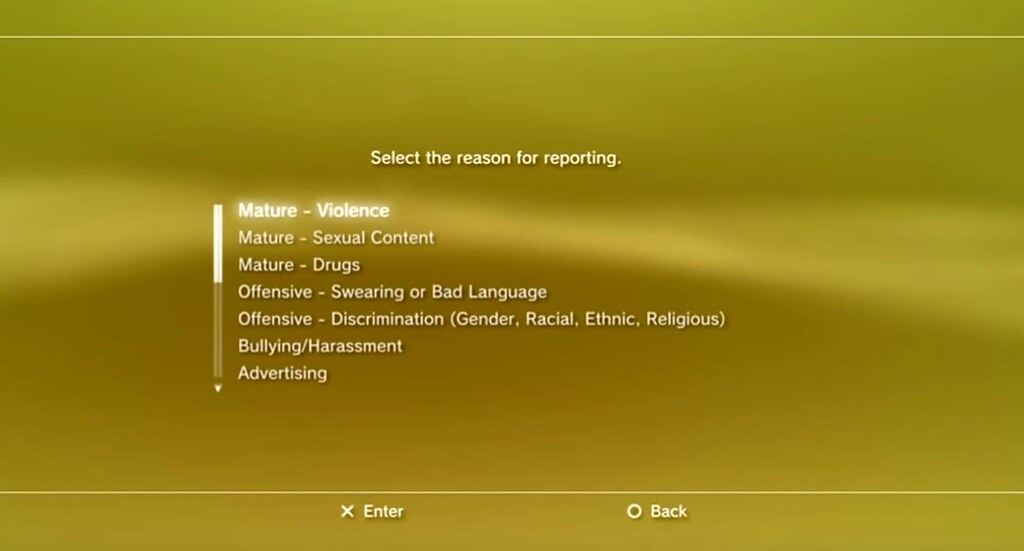

You might remember what these looked like on PlayStation 3 (above). In those days we used the term ‘grief report’, and while these options did the job at the time, they were very much of their era.

For the PlayStation 4 generation, and to reflect the wealth of social features that came with it, we felt reporting was in need of a refresh.

What we did to research the reporting system

- Extensive data analysis of reports submitted by PSN users – what were you reporting, why and where?

- Research into emerging trends in this field, in both online gaming and social media – was there anything we could learn from, or any ideas that might spark our imaginations?

- Finally, and in order to ensure what we ended up with reflected your views, we reached out to our users on the official PlayStation forums and beta trial community.

Rolling out the updated reporting system in System Software Update 4.00…

The final spec we initially rolled out in System Software Update 4.00 had some real standout features:

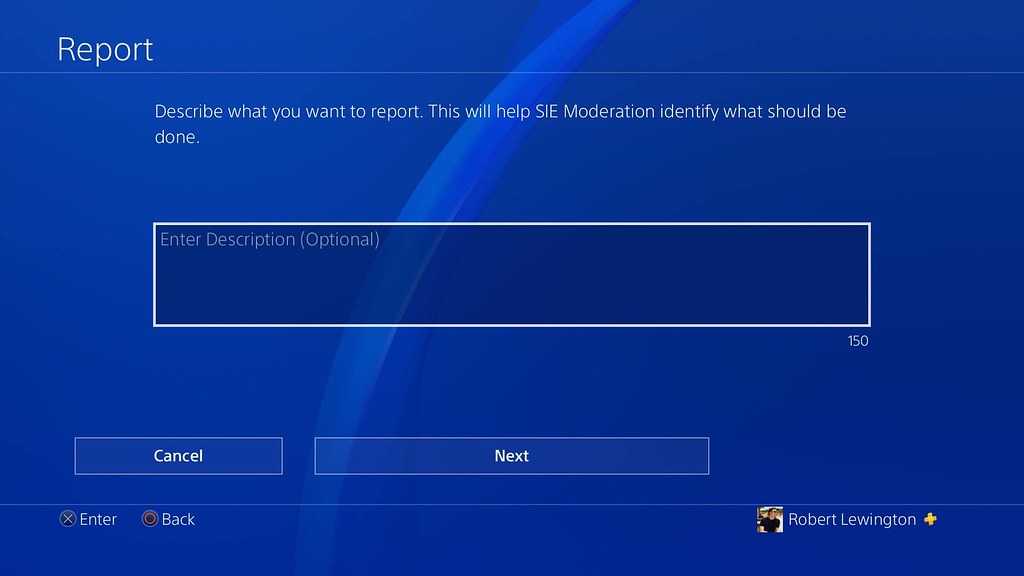

- We introduced multi-layered report reasons, allowing you to be more specific about exactly what you were reporting

- We provided more information about how reporting works, explaining when to use each option and that every report we receive is reviewed manually by a person

- We gave you options to resolve your issue quickly – for example, we created easier and more intuitive ways to block players you would prefer not to communicate with, for whatever reason

- We even gave you a free text box so you could explain the issue to us in your own words

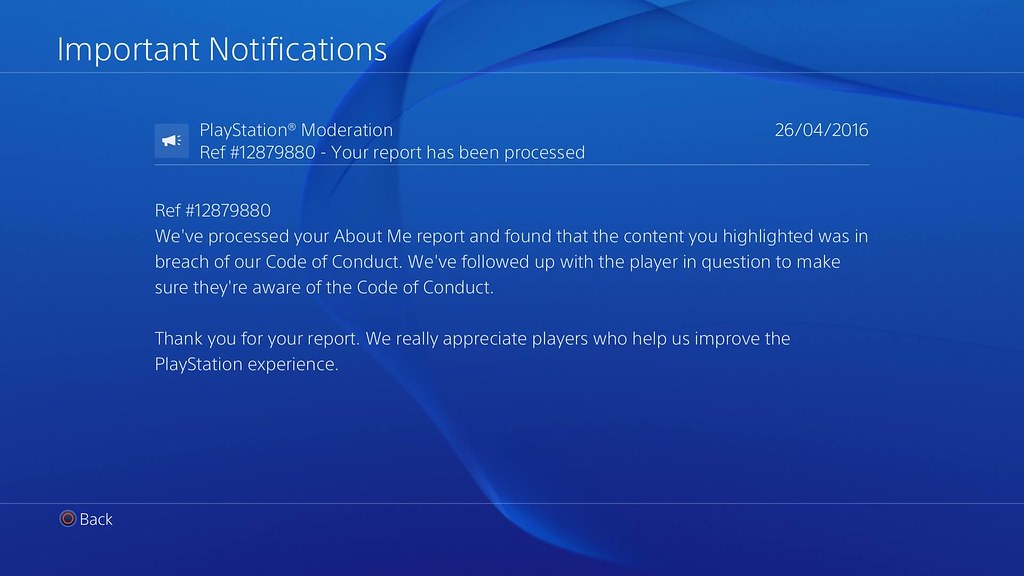

Around the same time, we also introduced system messages for reports, both to let you know when we’d received your report and to notify you once we’d reached a decision.

…and its immediate positive impact on reporting as a result

We immediately saw a hugely positive impact from these changes.

For one thing, by helping you better understand how and when to report content to us, we saw the accuracy of the reports we received improve. For example, far fewer of you reported Online IDs that, after review, did not appear to violate the Code of Conduct.

This meant that our average response time to reports fell considerably, allowing us to resolve the issues that really mattered to you more quickly.

One year on, these changes are still yielding improvements: based on what you told us in the free-text field of your reports, we made a change in System Software Update 5.00 that made the report option easier to find in Messages.

Whilst nothing is ever perfect, and we continue to look for new ways to improve all of our PSN safety features, we hope you agree that this was a significant step in the right direction.

In our next article on safety, I’ll provide more information on what we do once we receive your reports – and hopefully bust some myths that have developed around the subject.
